One of the questions I left open from my blog’s inaugural post on linear regression was How do you know when you’ve reached convergence?

That led me to learning about \(\epsilon\) (epsilon), or I suppose re-learning, if you count high school calculus, in which I think it made a single brief appearance in the formal definition of limit.

I’m going to take a rather circuitous route to explaining the role of \(\epsilon\) in linear regression, as I kept discovering interesting new things along the way.

Hopefully your attention span is larger than the value of \(\epsilon\) (har har)!

All the small things

\(\epsilon\) can be found in a variety of contexts, but it always represents an infinitesimal: a quantity that it is infinitely small and basically zero, but not zero-y enough to not exist.

In high school calculus, \(\epsilon\) is the difference between

- the value of a function, \(f(x)\), near its limit point, \(x \rightarrow a\), and

- the hypothetical value \(L\) that the function appears to tend toward at the actual limit point \(a\).

As the distance between \(x\) and \(a\) (which is called \(\delta\), delta) shrinks, so does \(\epsilon\), so the upper bounds of the two quantities move in tandem.

You can also use \(\delta\) and \(\epsilon\) to describe derivatives; i.e., if \(dx = \delta\) and \(\frac{dy}{dx} = \epsilon\), you can imagine how shrinking one shrinks the other, and more importantly, that the ideal value for both of them is as close as you can get to zero without vanishing.

Machine epsilon

There is also a quantity that computer scientists call machine epsilon, which defines the smallest number that a given computing environment can represent.

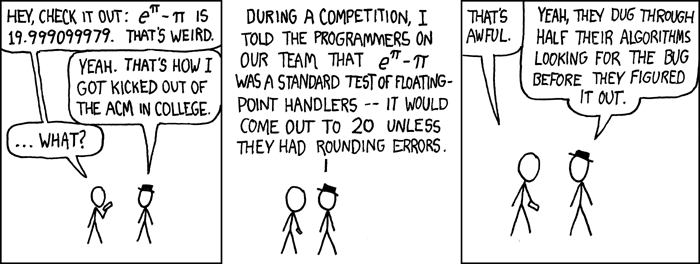

It is the largest \(\epsilon\) that satisfies

which looks like another math koan, or at least some Orwellian “newmath” like \(2 + 2 = 5\).

Actually, that \(+\) should have a \(\bigcirc\) around it: \(\oplus\), giving us

The \(\oplus\) means floating-point addition.

Now, in my programming life, I have not had a need for significant precision until now, with my new interest in fields that require numerical computing.

I also started with Python (well, JavaScript if you go way back), so I never even really had to distinguish between floats and ints in my code—much less think about the consequences or even know the difference, really. I just knew that if you wanted a number with a decimal point, it had to be a float.

Once you get into doing real math with computers, though, you can’t just keep floating along like that, because the limitations of machines come into play.

Floating-point arithmetic

Floating-point arithmetic was devised because computer memory, especially in machines from the very early days of computing, can only allocate a finite amount of resources to data.

Floating-point is basically scientific notation. Let’s consider the mass of a proton in scientific notation (this post isn’t about physics, but as an astoundingly small value, it’s a good example):

The goal of scientific notation is to translate numbers of extreme magnitude into more human-friendly terms. But computers don’t operate like humans do. So how can we translate this number into computer-friendly terms?

First, computers use binary, not base 10 (decimal system). Of course, we know that, but what does that actually mean? I always thought binary sequences were random collections of ones and zeros—as if computer programs were like canvasses assaulted by a digital Jackson Pollock armed with buckets of bits and bytes.

![(source: Ephert [CC BY-SA 4.0] https://creativecommons.org/licenses/by-sa/4.0, from Wikimedia Commons) binary counter](https://upload.wikimedia.org/wikipedia/commons/7/75/Binary_counter.gif)

I am not well-versed enough in binary to explain this in words, but you can definitely see some sort of pattern in the movement of the values here.

Anyway, let’s rewrite the mass of a proton in binary (scroll sideways, it’s a long one):

0.0000000000000000000000000000000000000000000000000000000000000000000000000000000000000000100001001000010

And in binary scientific notation (sort of):

Almost there. Let’s take a look at how computers actually store floating-point numbers:

Every number is allocated 64 bits of memory (this can vary, but let’s stick with 64). There are 52 bits for the mantissa (computer science term for significand), 11 for the exponent, and 1 for the sign (positve or negative). Each bit can be 1 or 0, which is the whole reason for using binary in the first place.

Storing the mantissa, then, is just a matter of finding a way to fit the relevant sequence of ones and zeros into the 52 slots allocated for it. If there are too many digits, chop them off; if there are too few (as in this case), add padding. This is where floating-point differs from scientific notation.

Looks like a lot of zeros. Incidentally, the method to obtain a base-10 number from this can be expressed mathematically in a cool way:

where \(\textrm{bit}_n\) is the binary value of the bit at index \(n\) (from the left, starting at 0), \(p\) is the precision (number of bits), and \(e\) is the exponent (which just happens to be \(-51\) here; it has nothing to do with the number of bits for the mantissa).

Okay, that was fun. Now what?

Rounding errors

If there are too many digits, chop them off. This opens up a big can of worms. Or an infinitesimal one, given the topic at hand (har har).

In exchange for the computability (read: speed and power) and storeability of floating-point numbers, we have to accept a limit to their precision. The digits of \(\pi\), for example, repeat endlessly. We can’t expect a computer to handle them all, or a calculator, for that matter.

Even totally pedestrian numbers like \(\frac{1}{3}\) go on forever. This means that computers cannot reason about fractional quantities in the way that the human mind can.

That is, unless you are working in some baller language like Clojure that has Ratio data types:

(/ 1 3) ; 1/3

(type (/ 1 3)) ; clojure.lang.Ratio

(+ 1 (/ 1 3)) ; 4/3

1 + (1/3)

# 1.3333333333333333

Most languages seem to have implemented some sort of corrective mechanism that works in simple cases, but notice what happens with the following expressions in Python:

1/3 + 1/3 + 1/3

# 1.0

7 * (1/7)

# 1.0

1/7 + 1/7 + 1/7 + 1/7 + 1/7 + 1/7 + 1/7

# 0.9999999999999998

7 * (1/7) == 1/7 + 1/7 + 1/7 + 1/7 + 1/7 + 1/7 + 1/7

# False

Keep in mind that we’re working in binary here. Unexpectedly (if you are not proficient in binary), many “simple” numbers that don’t repeat in decimal do in binary:

All of this leads to floating-point rounding errors that can quickly snowball into massively erroneous output over many iterations. Here’s the example from the Rachel Thomas lecture. Start with \(x = \frac{1}{10}\) and keep applying the function to the output you get:

If you do this by hand you get

but when you try to execute this on a computer:

def f(x):

if x <= 1/2:

return 2 * x

elif x > 1/2:

return 2 * x - 1

x = 1/10

for _ in range(80):

print(x)

x = f(x)

(use 'clojure.pprint)

(defn f [x]

(if (<= x 0.5)

(* x 2)

(- (* x 2) 1)))

(pprint (take 80 (iterate f 0.1)))

it doesn’t take long before the value converges on 1, which is very bizarre.

Granularity

Limited storage space leads to limited precision. A related consequence of this is that floating-point numbers are discrete, not continuous like the number line we picture in our minds. (As a kid, I think I imagined a spectrum. Fun? Yes. Accurate? Not sure.) Computers are capable of pretty precise calculations, but not perfectly precise.

Think of floating-point numbers like pixels. While it is true that computer displays have become less and less “pixelly-looking” over the years, and text rendered on a Retina screen can almost look like a printed page, we know that such output still consists of pixels.

The same is true for floating-point numbers. They allow for some degree of precision, but the number line they form is more like a dotted line than a solid line. (Even stranger, the density of the line changes at different scales, but I’ll leave that one for someone else to explain!)

Take the binary number 1.0 (regular binary, not floating-point). This is equal to the decimal number 1 as well. If we keep moving the 1 to the right and adding zeros accordingly, the value is halved:

It is pretty clear that with 52 slots for binary values, you are going to run out of room at some point—even with the orders-of-magnitude wiggle room that the exponent provides.

This means that after enough iterations, a value can no longer be halved. That unhalvable value is the smallest difference that that computing environment can represent, and it is the distance between a given number and its closest possible neighbor on the floating-point number line.

You could think of that as the size of a ”number pixel.” The whole pixel has the value of (say) its left edge, and any quantity that falls within the space of the pixel gets rounded to the edge of that pixel or the next one.

Machine epsilon, then, is the largest quantity that is less than the width of a number pixel. So it makes sense, then, that \(1 \oplus \epsilon = 1\).

This means that machine epsilon is the largest possible rounding error of a floating-point system.

It also means that on our continuous human number line, as soon as you move rightward of machine epsilon, you have entered the territory of the next machine-perceptible number. That is how machine epsilon defines the smallest possible difference that the system can represent.

Calculating machine epsilon

Before I started writing this post, I just wanted to see the code to calculate machine epsilon. But I didn’t fully understand what was going on, and my dissatisfaction with that led me to go back through all the stuff I just explained. (Of course, it didn’t help that I wasn’t sure about the syntax of loop in Clojure 🙈)

; Source: https://github.com/log0ymxm/gorilla-worksheets/blob/master/src/machine-epsilon.clj

(loop [k 1 s 1.0]

(if (or (<= (+ s 1.0) 1.0)

(> k 100))

[(dec k), (* 2.0 s)]

(recur (inc k) (* 0.5 s))))

; [53 2.220446049250313E-16]

This loop is trying to find the unhalvable value I wrote about earlier. Start with s = 1.0 and keep halving that (moving the binary 1 rightward) until (<= (+ s 1.0) 1.0) or \(1 + s \leq 1\). That is, until the computer no longer recognizes s as having value.

Once that happens, you’ve gone too far and fallen within the bounds of a number pixel. To find the edge of the next pixel—that is, the next adjacent perceptibly different number—move the binary 1 left by one place (in decimal, that’s multiplying by 2).

That’s the reason for the final (* 2.0 s) once the terminating condition is met.

k tells us that the 1 is in the \(k\)th place after the decimal point. Here, that’s the 53rd place. That’s no surprise; we know it’s a 64-bit number. But k could be larger or smaller depending on the precision of the floating-point system, with higher values meaning more available places and thus higher precision.

Julia, NumPy, and R have built-in ways to find machine epsilon (or rather, the value just a hair larger than machine epsilon). Of the three, Julia’s value is the most precise (to the same level of precision as Clojure above).

# source: https://docs.julialang.org/en/v1/manual/integers-and-floating-point-numbers/index.html

julia> eps()

2.220446049250313e-16

julia> eps(Float32) # 32-bit (single-precision) number

1.1920929f-7

julia> 1 + eps() / 2

1.0

# source: https://stackoverflow.com/questions/19141432/python-numpy-machine-epsilon

>>> import numpy as np

>>> np.finfo(float).eps

2.22044604925e-16

>>> np.finfo(np.float32).eps # 32-bit (single-precision) number

1.19209e-07

>>> 1 + np.finfo(float).eps / 2

1.0

```

```R tab=

# source: https://stackoverflow.com/questions/2619543/how-do-i-obtain-the-machine-epsilon-in-r

> .Machine$double.eps

[1] 2.220446e-16

> 1 + .Machine$double.eps / 2

[2] 1

Just for completeness, this is that value in math notation:

So, even though I don’t immediately see myself caring about the specific value of machine epsilon (as opposed to its implications), that’s pretty neat.

Speaking of the implications of \(\epsilon\)—of the machine and non-machine variety—I have to bring the discussion back to what brought me to \(\epsilon\) in the first place: convergence in linear regression.

Linear regression

\(\epsilon\) has two meanings in linear regression depending on whether you are creating the model or using the model.

Convergence threshold

Previously, we performed stochastic gradient descent in a way that we could see the cost decreasing with every iteration, but we still had to adjust the number of iterations manually and judge convergence by looking through the output to find the point where the next iteration wasn’t really worth it.

It’s conceptually very simple to have the computer do this for you. On each iteration, just keep track of the cost obtained after the previous iteration and compare it to the current cost. If the difference is below a certain threshold value, stop iterating.

In this context, the threshold value is called \(\epsilon\). Something like \(0.0001\) might be adequate.

Let’s take first part of the code from the last post:

import numpy as np

import sklearn.datasets

X, y = sklearn.datasets.make_regression(n_samples=500, n_features=5)

X = np.hstack((np.ones((X.shape[0], 1)), X)) # padding for bias column

y = np.vstack(y) # this just fixes a quirk of sklearn's output

weights = np.zeros((X.shape[1], 1))

def cost(X, y, weights):

num_samples = X.shape[0]

o = np.ones((num_samples, 1))

errors = X @ weights - y

cost_array = (o.T @ np.square(errors)) / (2 * num_samples)

return cost_array[0][0]

and modify our train_model function to show us the difference between the current and the last cost on every 50th iteration, and train for 1500 epochs.

def train_model(X, y, epochs, learning_rate=0.01):

weights = np.zeros((X.shape[1], 1))

num_samples = X.shape[0]

last_cost = 0

for i in range(epochs):

errors = X @ weights - y

step_distance = (X.T @ errors) / num_samples

weights -= learning_rate * step_distance

this_cost = cost(X, y, weights)

if (i % 50 == 0):

print(i, abs(this_cost - last_cost))

last_cost = this_cost

return weights

train_model(X, y, 1500)

At 600 epochs, the difference falls to about 0.001 and at 700 epochs, it falls to about 0.0001. Considering the difference between the initial 20 iterations was in the hundreds, I think 0.001 is a sufficiently good \(\epsilon\).

Let’s rewrite the function to stop training based on a value of epsilon rather than a number epochs:

def train_model(X, y, epsilon=0.001, learning_rate=0.01):

weights = np.zeros((X.shape[1], 1))

num_samples = X.shape[0]

last_cost = 0

epochs = 0 # just to know the value; not critical for training

while True:

epochs += 1

errors = X @ weights - y

step_distance = (X.T @ errors) / num_samples

weights -= learning_rate * step_distance

this_cost = cost(X, y, weights)

if abs(this_cost - last_cost) < epsilon:

break

last_cost = this_cost

print(f"Completed {epochs} epochs of training.")

print(f"Final cost: {cost(X, y, weights)}")

return weights

train_model(X, y)

# Completed 612 epochs of training.

# Final cost: 0.05001815274191081

Nice!

Random error

I sort of lied when I said linear regression is

I mean, it is. But that function gives the idealized, predicted value, the \(\hat y\). The real value is

(And in statistics they use \(\beta\) rather than \(\theta\), just to keep you on your toes.)

This \(\epsilon\) is called the random error, which sounds like the consequence of a really sloppy programmer, but is simply a way of dealing with the unavoidable fact that no model can be perfect.

Remember how we used the actual error (\(\hatY - Y\)) to compute the distance between our hypothetical line of best fit and each data point? The random error is basically saying that

- The regression line is the line that best fits—not perfectly fits—the data used to create it, and

- Because of that, predictions made with the model are not likely to fall directly on the line.

That doesn’t mean they won’t, but we can’t know for sure, and it’s not likely. This uncertainty is captured by \(\epsilon\) (which we should hope is a small value, if not infinitesimal 😅). \(\epsilon\) is considered to be taken from

- a normally distributed (bell curve) set of values

- with a mean of 0

The second property makes sense; our line is the best-fitting line, so its underestimates should equal its overestimates). The first property is arbitrary—you could choose any kind of probability distribution, but a bell curve is apparently the simplest one that is correct enough frequently enough.

This is more of a theoretical shim than anything that concerns atually coding a model, but it’s still an important variable.

In a future post, I will talk about a topic that takes \(\epsilon\) to the next level. Stay tuned!

References

- Floating Point/Epsilon, Wikibooks

- Floating-point arithmetic, Wikipedia

- B6014 Managerial Statistics: Linear Regression, Columbia Business School

- Assumption of a Random error term in a regression, StackExchange Mathematics